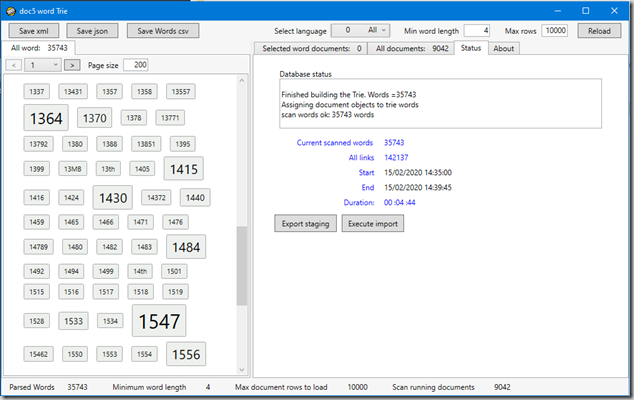

This is a maintenance WPF client application that indexes the words found in e-book titles and descriptions for the doc5ync project (http://doc5.5ync.net/).

Before going into technical details, let us start with a few significant screenshots of the app.

1. Scanning words in all languages for 10,000 data records, with a minimum word length of 4 characters.

After the scan, the words are displayed on the left side of the figure above, with each word's font size reflecting its number of occurrences (larger font = more occurrences). This is done with a user control whose font size is bound through a value converter.

The user control is a simple Border enclosing a TextBlock:

```xml
<UserControl.Resources>
    <conv:TrieWordFontSizeConverter x:Key="fontSizeConverter" />
</UserControl.Resources>

<Grid x:Name="grid_main">
    <Border BorderBrush="DarkGray" CornerRadius="2" Background="#FFEDF0ED"
            Height="auto" Margin="2" BorderThickness="1">
        <TextBlock Text="{Binding Word}"
                   Padding="4px"
                   VerticalAlignment="Center"
                   HorizontalAlignment="Center"
                   FontSize="{Binding ., Converter={StaticResource fontSizeConverter}, FallbackValue=12}">
        </TextBlock>
    </Border>
</Grid>
```

The converter scales the font size according to the word's number of occurrences:

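```csharp
using System;
using System.ComponentModel;
using System.Globalization;
using System.Windows;
using System.Windows.Data;

public class TrieWordFontSizeConverter : IValueConverter
{
    public object Convert(object value, Type targetType, object parameter, CultureInfo culture)
    {
        const double minFontSize = 11.0,
                     defaultFontSize = 12.0,
                     maxFontSize = 32.0;

        // No view model is available in the designer: use the default size.
        if (DesignerProperties.GetIsInDesignMode(new DependencyObject()))
            return defaultFontSize;

        iTrieWord word = value as iTrieWord;
        if (word == null)
            return defaultFontSize;

        // Cap the divisor at 9 so that any word occurring 9 times or more
        // reaches the maximum size; guard against an empty scan (max == 0).
        double max = Math.Min(9.0, (double)iWordsCentral.Instance.MaxOccurrences);
        if (max <= 0)
            return defaultFontSize;

        double size = (word.Occurrences / max) * maxFontSize;

        // Clamp the result to [minFontSize, maxFontSize].
        if (size > maxFontSize)
            return maxFontSize;
        if (size < minFontSize)
            return minFontSize;
        return size;
    }

    // One-way binding only: a font size cannot be converted back to a word.
    public object ConvertBack(object value, Type targetType, object parameter, CultureInfo culture)
    {
        throw new NotSupportedException();
    }
}
```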
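The converter scales against a fixed divisor (at most 9) and does not take the minimum occurrence count into account. If a size that spans the whole observed range is preferred, one option is to interpolate linearly between the minimum and maximum font sizes. The sketch below is hypothetical (the `FontScale` class and `LinearFontSize` method are not part of the project) and assumes the occurrence counts are integers:

```csharp
using System;

// Hypothetical helper, not part of the original project.
public static class FontScale
{
    // Maps an occurrence count linearly onto [minFontSize, maxFontSize],
    // using the observed [minOcc, maxOcc] range of the current scan.
    public static double LinearFontSize(int occurrences, int minOcc, int maxOcc,
                                        double minFontSize = 11.0, double maxFontSize = 32.0)
    {
        // Degenerate range (e.g. every word occurs equally often): smallest size.
        if (maxOcc <= minOcc)
            return minFontSize;

        // Clamp into the observed range, then normalise to t in [0, 1].
        int clamped = Math.Max(minOcc, Math.Min(maxOcc, occurrences));
        double t = (double)(clamped - minOcc) / (maxOcc - minOcc);

        // Interpolate between the two font sizes.
        return minFontSize + t * (maxFontSize - minFontSize);
    }
}
```

Inside the converter this would be called with `word.Occurrences`, `iWordsCentral.Instance.MinOccurrences` and `iWordsCentral.Instance.MaxOccurrences`. Because word frequencies are usually heavily skewed, interpolating on the logarithm of the counts may spread the sizes more evenly; that is a design choice, not something the original converter does.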

Load, Scan and link words to data items

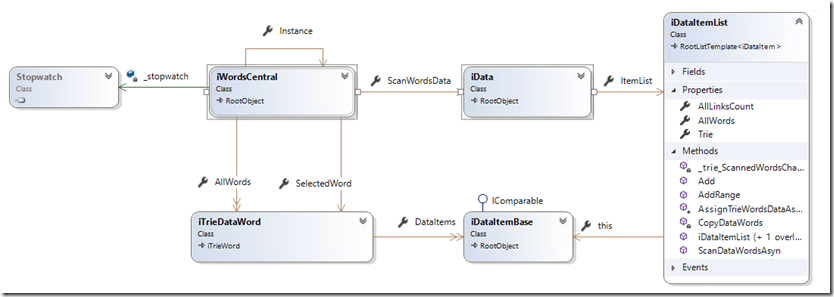

The View Model objects and processing flow

iWordsCentral is the ‘main’ view model (a singleton), which provides word scanning and data-object assignment through its ScanWordsData member (an iData object).

ItemList (an iDataItemList) is the iData member responsible for building the Trie (which it owns) and assigning the Trie's words to its data items.
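The post does not show how the iWordsCentral singleton is created. Since `iWordsCentral.Instance` is read both from the UI thread (by the converter) and from a `Task.Run` worker (by `LoadData`), a thread-safe lazy singleton is a natural fit. The following is a minimal sketch of that pattern only; `WordsCentralSketch` and `CreatedCount` are hypothetical names used for illustration:

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for iWordsCentral: only the singleton mechanics are shown.
public sealed class WordsCentralSketch
{
    // Lazy<T> defaults to LazyThreadSafetyMode.ExecutionAndPublication, so the
    // factory runs exactly once even if the UI thread and a Task.Run worker race.
    private static readonly Lazy<WordsCentralSketch> _instance =
        new Lazy<WordsCentralSketch>(() => new WordsCentralSketch());

    private static int _createdCount; // demonstration only: counts constructor calls

    public static WordsCentralSketch Instance => _instance.Value;

    public static int CreatedCount => Volatile.Read(ref _createdCount);

    // Private constructor: callers must go through Instance.
    private WordsCentralSketch()
    {
        Interlocked.Increment(ref _createdCount);
    }
}
```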

When the Load button is clicked, MainWindow calls its ReloadData() method, which runs iWordsCentral.Instance.LoadData() on a thread-pool thread:

```csharp
async void ReloadData()
{
    // Run the load on a thread-pool thread so the UI thread stays responsive.
    await Task.Run(() => iWordsCentral.Instance.LoadData());
}
```

LoadData() loads the data records into a list, rootList (the number of records to load is a parameter; see the main figure), assigns it to the ItemList of the scan object (iData), and then calls the iData method that builds the Trie and assigns data items to each of the Trie's nodes:

```csharp
_scanWordsData.ItemList = rootList;
bool scanWordsResult = await _scanWordsData.ScanDataWordsAsync(_minWordLength, _includeDocAreaWords, _cancelSource.Token);
```
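The scan receives `_cancelSource.Token`, presumably from a `CancellationTokenSource` that the UI can cancel. The sketch below shows the usual cooperative-cancellation shape under that assumption: the worker checks the token between items, and cancellation is reported as `false` rather than as an error. `CancellationSketch` and `ScanAsync` are hypothetical names, and the per-item work is left as a placeholder:

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical sketch of a cancellable scan; not the project's code.
public static class CancellationSketch
{
    public static async Task<bool> ScanAsync(IReadOnlyList<string> items, CancellationToken token)
    {
        try
        {
            await Task.Run(() =>
            {
                foreach (string item in items)
                {
                    // Stop promptly once a Cancel button has called Cancel() on the source.
                    token.ThrowIfCancellationRequested();
                    // ... per-item work would go here ...
                }
            }, token);
            return true;
        }
        catch (OperationCanceledException)
        {
            // Also catches TaskCanceledException when the token was cancelled before the task started.
            return false;
        }
    }
}
```

A cancelled `CancellationTokenSource` cannot be reset, so a fresh one is typically created for each scan.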

The iData object delegates the work to its ItemList, whose method proceeds as follows:

```csharp
public async Task<bool> ScanDataWordsAsyn(int minWordLength, bool scanRootItems, CancellationToken cancelToken)
{
    if (_trie == null)
        _trie = new iTrie();

    // Build a single string with all textual items and parse its words.
    // A StringBuilder avoids quadratic string concatenation, and the separator
    // keeps the last word of one item from merging with the first word of the next.
    var globalText = new StringBuilder();
    foreach (iDataItem item in this)
        globalText.Append(item.StringToParse).Append(' ');

    string global_string = globalText.ToString();
    await Task.Run(() => _trie.LoadFromStringAsync(global_string, minWordLength, notifyChanges: false));
    _trie.Sort();

    List<iTrieWord> trieWordList = _trie.AllStrings;

    // Copy the Trie words (strings) to a DataTrieWord list.
    CopyDataWords(trieWordList);

    // Assign words to data items.
    bool result = await AssignTrieWordsDataAsync(scanRootItems, cancelToken);
    return result;
}
```
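The iTrie class itself is not shown in the post. For readers unfamiliar with the structure, here is a minimal trie sketch under stated assumptions: words are runs of letters and digits, case is preserved, and only words of at least `minWordLength` characters are stored. `SimpleTrie`, `LoadFromString` and `AllWords` are hypothetical names, not the project's API:

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical minimal trie; the project's iTrie is not shown in the post.
public sealed class SimpleTrie
{
    private sealed class Node
    {
        // SortedDictionary keeps children in ordinal char order, so a
        // depth-first walk yields the words already sorted.
        public readonly SortedDictionary<char, Node> Children = new SortedDictionary<char, Node>();
        public int Occurrences; // > 0 when a word ends at this node
    }

    private readonly Node _root = new Node();

    // Splits text on any character that is not a letter or digit and inserts
    // every word of at least minWordLength characters. Case is preserved.
    public void LoadFromString(string text, int minWordLength)
    {
        var current = new StringBuilder();
        foreach (char c in (text ?? string.Empty) + " ") // trailing space flushes the last word
        {
            if (char.IsLetterOrDigit(c))
            {
                current.Append(c);
                continue;
            }
            if (current.Length > 0 && current.Length >= minWordLength)
                Insert(current.ToString());
            current.Clear();
        }
    }

    // Walks (and creates) one node per character, then counts the word at its last node.
    public void Insert(string word)
    {
        Node node = _root;
        foreach (char c in word)
        {
            if (!node.Children.TryGetValue(c, out Node next))
            {
                next = new Node();
                node.Children[c] = next;
            }
            node = next;
        }
        node.Occurrences++;
    }

    // Returns every stored word with its occurrence count, in sorted order.
    public List<(string Word, int Occurrences)> AllWords()
    {
        var result = new List<(string Word, int Occurrences)>();
        Walk(_root, new StringBuilder(), result);
        return result;
    }

    private static void Walk(Node node, StringBuilder prefix, List<(string Word, int Occurrences)> result)
    {
        if (node.Occurrences > 0)
            result.Add((prefix.ToString(), node.Occurrences));
        foreach (KeyValuePair<char, Node> child in node.Children)
        {
            prefix.Append(child.Key);
            Walk(child.Value, prefix, result);
            prefix.Length--; // backtrack
        }
    }
}
```

With this shape, calling `LoadFromString` once per data item would also avoid building one large concatenated string; whether that fits the real iTrie depends on code the post does not show.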

The data item list loops through all its words and data items, asking each data item to assign itself to the given word if that word appears in its data:

```csharp
foreach (var word in _dataWordList)
{
    foreach (var ditem in this)
        await ditem.AssignChildrenTrieWordAsyn(scanRootItems, word, cancelToken);
}
```
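This nested loop runs a substring search for every (word, item) pair, so its cost grows with the number of words times the total text size. A different technique, an inverted index, tokenizes each description only once and records, for each word, the items that contain it. The sketch below is that alternative, not the approach used in the post; `InvertedIndexSketch` and `Build` are hypothetical names, and for words made only of letters and digits it applies the same boundary rule (and the same case-sensitive ordinal comparison) as IndexOfWholeWord:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical alternative (an inverted index); not the approach used in the post.
public static class InvertedIndexSketch
{
    // Maps each word to the indices of the descriptions that contain it as a whole word.
    public static Dictionary<string, List<int>> Build(IReadOnlyList<string> descriptions, int minWordLength)
    {
        var index = new Dictionary<string, List<int>>(StringComparer.Ordinal);
        for (int i = 0; i < descriptions.Count; i++)
        {
            string text = descriptions[i];
            if (text == null)
                continue;

            var seen = new HashSet<string>(StringComparer.Ordinal); // add each item once per word
            int start = -1; // start of the current word, or -1 outside a word
            for (int j = 0; j <= text.Length; j++)
            {
                bool inWord = j < text.Length && char.IsLetterOrDigit(text, j);
                if (inWord)
                {
                    if (start < 0) start = j;
                    continue;
                }
                // A word just ended at j: record it if it is long enough.
                if (start >= 0 && j - start >= minWordLength)
                {
                    string word = text.Substring(start, j - start);
                    if (seen.Add(word))
                    {
                        if (!index.TryGetValue(word, out List<int> items))
                        {
                            items = new List<int>();
                            index[word] = items;
                        }
                        items.Add(i);
                    }
                }
                start = -1;
            }
        }
        return index;
    }
}
```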

Each data item looks for those of its children whose description contains the given word and assigns them to the word:

```csharp
var wordItems = this.Children.Where(i => i.Description != null
                                      && i.Description.IndexOfWholeWord(word.Word) >= 0);
```

IndexOfWholeWord note

IndexOfWholeWord is an efficient string extension method that ensures the given word is present in the data as a whole word, not merely as part of a longer one (for example, "cat" inside "category"). I struggled to find a solution to this problem and finally found an awesome one proposed by https://stackoverflow.com/users/337327/palota:

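```csharp
// credit: https://stackoverflow.com/users/337327/palota
public static int IndexOfWholeWord(this string str, string word)
{
    // Find each ordinal occurrence of word, resuming the search one
    // character after the previous hit.
    for (int j = 0; j < str.Length &&
         (j = str.IndexOf(word, j, StringComparison.Ordinal)) >= 0; j++)
    {
        // Accept the hit only if it is neither preceded nor followed by a letter or digit.
        if ((j == 0 || !char.IsLetterOrDigit(str, j - 1)) &&
            (j + word.Length == str.Length || !char.IsLetterOrDigit(str, j + word.Length)))
            return j;
    }
    return -1;
}
```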
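For comparison, the same check is often written with a regular expression and `\b` word boundaries. The sketch below is a hypothetical variant (`WholeWordRegexExtensions` and `IndexOfWholeWordRegex` are not from the post). It is shorter, but its notion of a word character is different: .NET's `\b` treats letters, digits and connector punctuation such as `_` as word characters, and words that begin or end with punctuation (for example "C#") behave differently. The loop above also avoids building a regex for each distinct word, which is one reason to prefer it in a hot path:

```csharp
using System.Text.RegularExpressions;

// Hypothetical regex-based variant, for comparison only.
public static class WholeWordRegexExtensions
{
    public static int IndexOfWholeWordRegex(this string str, string word)
    {
        // Regex.Escape makes the search word literal; \b requires a word boundary on both sides.
        Match match = Regex.Match(str, @"\b" + Regex.Escape(word) + @"\b");
        return match.Success ? match.Index : -1;
    }
}
```

For example, `"foo_bar".IndexOfWholeWordRegex("foo")` returns -1, whereas IndexOfWholeWord returns 0 because `_` is not a letter or digit.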

Finally, as you may have noticed, a simple Stopwatch is embedded in the main view model to report the elapsed time during processing. For this to make sense, all methods are asynchronous and notify changes through the UI thread (Dispatcher). You can ignore the async artifacts in the code above and concentrate on the processing steps themselves.
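An alternative to calling the Dispatcher explicitly is `IProgress<T>`: a `Progress<T>` instance captures the `SynchronizationContext` of the thread that creates it, so when it is created on the WPF UI thread its callback runs on that thread. The sketch below combines it with a `Stopwatch`; `TimingSketch` and `TimeAsync` are hypothetical names, not the project's code:

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

// Hypothetical helper; not the project's view model code.
public static class TimingSketch
{
    // Runs work on the thread pool, measures it with a Stopwatch and reports
    // the elapsed time through IProgress<T> (null means "don't report").
    public static async Task<TimeSpan> TimeAsync(Func<Task> work, IProgress<TimeSpan> progress)
    {
        Stopwatch stopwatch = Stopwatch.StartNew();
        try
        {
            await Task.Run(work);
        }
        finally
        {
            stopwatch.Stop();
            // Report even if work failed, so the UI still shows how long it ran.
            progress?.Report(stopwatch.Elapsed);
        }
        return stopwatch.Elapsed;
    }
}
```

On the UI side, something like `new Progress<TimeSpan>(t => ElapsedText = t.ToString(@"mm\:ss\.fff"))`, created on the UI thread, would update a bound property; `ElapsedText` is a hypothetical view-model property.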

Presentation

Once all the processing is done, the presentation work remains: displaying the list of documents for a selected word.

This will be the subject of a future post.